Claude is the latest instalment from Anthropic’s studio in the AI space. This chatbot has an intuitive design, gives good feedback, and is easy to use. However, AI still raises some eyebrows regarding its privacy and security implications. So, with this in mind, we decided to test Claude, thoroughly dissecting its Terms of Service and Privacy Policy to understand how it works and whether it is safe to use.

In this detailed guide, we will answer your questions about using this chatbot and how to protect your privacy and security.

What is Claude AI?

Claude is a helpful AI assistant owned by Anthropic, built to help users get results. It comes in three versions: Claude 1, Claude 2, and Claude Instant.

Claude 2 performs better and handles a richer context than Claude 1 because it was trained on a much larger dataset. Meanwhile, Claude Instant is faster and more cost-effective, making it excellent for casual conversation, document-based Q&A, text analysis, and summarization.

Below is a summary of the three Claude versions, their similarities, and their differences:

| Feature | Claude 1 | Claude 2 | Claude Instant |
|---|---|---|---|
| Key capabilities | Innovative content generation, intelligent communication, detailed guidelines | All Claude 1 features, plus academic capabilities and more | Casual dialogue, text summarization and analysis, document Q&A |
| Model size (unofficial estimates) | 137B parameters | 175B parameters | Not disclosed |
| Cost (per million output tokens) | $32.68 | $32.68 | $5.51 |
| Context window | 75,000 tokens | 100,000 tokens | 75,000 tokens |
| Performance | Excellent at complex tasks | Great at complex tasks | Excellent at casual tasks |
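To make the pricing row concrete, here is a small sketch that converts a token count into a dollar cost using the per-million-token prices listed in the table. The prices are taken as given from the table and may since have changed; the helper name `estimate_cost` is hypothetical.

```python
# Per-million-token prices as listed in the comparison table
# (taken as given; check Anthropic's current pricing before budgeting).
PRICE_PER_MILLION_TOKENS = {
    "Claude 1": 32.68,
    "Claude 2": 32.68,
    "Claude Instant": 5.51,
}


def estimate_cost(tokens: int, price_per_million: float) -> float:
    """Return the dollar cost of `tokens` tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    for model, price in PRICE_PER_MILLION_TOKENS.items():
        print(f"{model}: ${estimate_cost(10_000, price):.4f} per 10,000 tokens")
```

At these prices, 10,000 tokens cost about $0.33 on Claude 2 versus about $0.06 on Claude Instant, which is where Instant’s cost advantage comes from.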

The Claude versions were trained on extensive text and code datasets to make them more capable and reliable across various tasks. These tasks include text summarization and the creative generation of musical pieces, letters, scripts, code, poems, and emails. Claude is also great at creative projects, especially in collaboration with humans.

This training is meant to ensure that the tool does not produce harmful content, and it relies on datasets that have been meticulously filtered. Even better, Anthropic constantly monitors overall performance to keep safety risks in check. These datasets are also fairly recent, with the training data reaching as recent as December 2022 and containing some information from 2023.

How to use Claude, Claude 2, and Claude Instant

There are several ways to use the three versions of Claude. Anthropic offers an API for Claude, mainly for integration with other applications. Slack, for example, gets a personalized Claude bot with features that help the two work together smoothly. This lets Claude store and retrieve Slack threads and other shared material.

There’s more: through Anthropic’s web console, you can access Claude’s API and, with it, Claude’s capabilities. Console access lets you generate API keys and build with the tool.
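The console workflow ends with an API key. As a hedged sketch of what using that key looks like, the Python below builds (but does not send) a request to Anthropic’s Messages API using only the standard library. The endpoint, the `x-api-key` and `anthropic-version` headers, and the JSON body shape reflect the Messages API as we understand it today, not necessarily the 2023-era setup this article describes. The model name is a placeholder, and `build_claude_request` is a hypothetical helper.

```python
import json
import os
import urllib.request

# Assumed endpoint and version header for Anthropic's Messages API;
# confirm both against the official API reference before use.
API_URL = "https://api.anthropic.com/v1/messages"
API_VERSION = "2023-06-01"


def build_claude_request(prompt: str, api_key: str, model: str,
                         max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Claude to answer `prompt`."""
    body = {
        "model": model,            # placeholder: pick a model name listed in the console
        "max_tokens": max_tokens,  # upper bound on the length of the reply
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,  # the key generated in the web console
            "anthropic-version": API_VERSION,
            "content-type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_claude_request(
        "Summarize this paragraph in one sentence.",
        api_key=os.environ.get("ANTHROPIC_API_KEY", "sk-placeholder"),
        model="your-model-name",  # hypothetical placeholder
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req), then json.load the response.
```

Anthropic also publishes an official Python SDK (the `anthropic` package) that wraps these details; the standard-library version is shown only to make the shape of the request visible. Keep the API key in an environment variable rather than in your code.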

What are the key risks of using Claude?

With so much potential, tools like Claude naturally carry some risks alongside their benefits. These are:

- AI-aided cybercrime: People with bad intentions can and do use AI chatbots for potentially harmful tasks, such as using bash scripts to force Claude into generating phishing emails and code. That code can then be used to write programs capable of disrupting or damaging a computer, or of granting unauthorized remote access to it.

- Copyright issues: Since Claude’s training data comes entirely from existing text, it tends to reproduce pre-existing content without authorization. Claude and most AI chatbots do not cite sources, which can amount to copyright infringement. If, for example, a user publishes an article or blog post that Claude helped create, there is a chance the article will contain copyrighted content and get the user into serious trouble.

- Factual inaccuracies: Depending on its training data and how recent that data is, an AI chatbot can be rather limited in what it knows. If the training data is cut off in 2021, the chatbot can’t know anything about events afterwards. Claude’s cut-off point is 2022, though it includes some information from 2023. That still leaves room for factual inaccuracies and the infamous “hallucinations.”

- Data and privacy concerns: Claude requires users to sign up with a real, valid email address and phone number, which makes it anything but private. There is also the risk of your data being shared with unspecified third parties, and your conversations with Claude are frequently reviewed.

What are the benefits of using Claude?

Claude is a helpful tool that gets results. Below are a few reasons you should use Claude:

- Insight: Claude provides users with insight through its impressively advanced data processing capacity and an updated data cut-off point.

- Constitutional AI: This tool operates according to a set of rules, thanks to Anthropic’s training method. These rules make it easier to predict, control, and use Claude without hassle.

- Efficiency: Claude is efficient and capable of executing multiple tasks, or the same task repeatedly.

- Accuracy and Speed: With its efficiency, it is no surprise that Claude can handle large amounts of data in less time than regular AI chatbots. It maintains accuracy and speed via machine learning and advanced algorithms.

- Harmlessness: This tool is meant to assist people, helping us work better and faster. It is designed to be harmless despite its complexity and power.

It gives you the leading features associated with traditional AI chatbots. You can also rest assured that Claude is trained using a unique approach designed to make it much safer than other chatbots.

Five ways to get the most out of Claude

Claude has many practical and potential applications; we couldn’t cover them all if we tried, but below are the major ones you should know about:

- Efficient Searching: Claude offers impressive search functionality, swiftly sifting through large catalogs of web pages, documents, and databases and accurately locating the information you need.

- Text Summarization: Claude uses extensive natural language processing methods to pick out the key points from written material.

- Collaborative and Creative Writing: Claude opens up a whole new way to produce creative content. It can help draft and edit your content, making it an essential tool for content creators such as writers and influencers.

- Q&A: Do you have questions? Claude has the answers. This also makes it a strong foundation for building virtual assistants or customer support bots.

- Coding: Claude is full of tricks, and coding is not exempt. It can help with whatever coding tasks you have, such as suggesting improvements, creating code snippets, and even debugging lines of code.

These are just some ways to utilize Claude, but more methods exist, especially with more companies looking to integrate Claude with their apps.

How is Claude different from other AI models?

With AI models, there is an expected degree of inaccuracy and bias. Oddly enough, AI models are prone to “hallucinations,” where they invent an answer when they do not know the answer to a query. This may be because humans are their creators, and we are also prone to this behavior.

In addition, since AI chatbots do not possess a moral code, they can be complicit in illegal activities. In essence, AI chatbots can give detailed instructions on how to commit a crime.

Anthropic is aware of these drawbacks and has not just sidestepped them; it has moved to resolve them. Claude is designed to be helpful, harmless, and honest, which is meant to keep it from unknowingly aiding an illicit or banned activity.

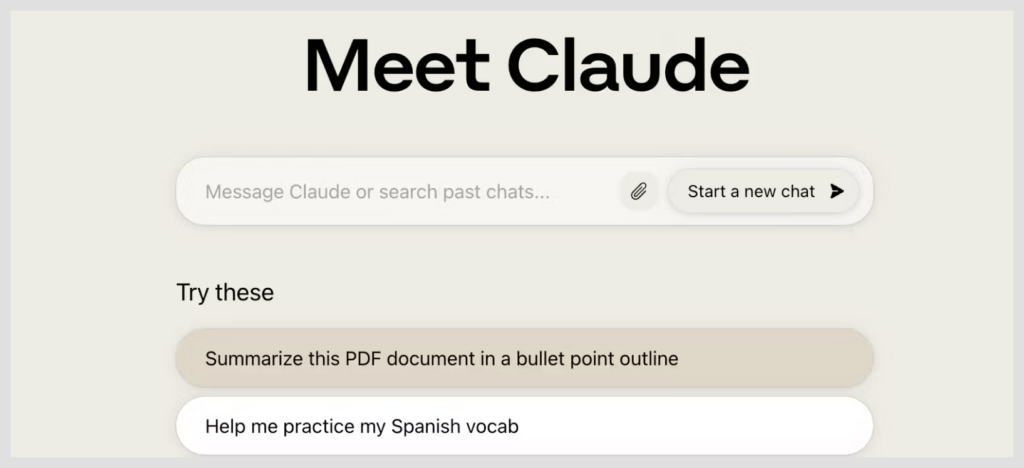

How to try Claude for yourself

The Claude chatbot is currently available in beta in the UK and US, with plans to expand its availability globally soon.

To access the service, sign up at Claude.ai. Once you have signed up, you can start a conversation or use one of the preset prompts.

Another way to try Claude is through Quora’s Poe, which gives users access to the Claude 2 100K model.

Can you trust the text created by Claude?

Technically, you can trust it, for the most part, since Claude is efficient, meticulous, and sometimes even human-like. In our tests, Claude provided fairly decent and verifiable information on a range of topics and concepts.

But if you need answers about events after 2022, you may have to wait a while, though it shouldn’t be long before Anthropic brings it up to date. The major concern with Claude is its hallucination problem, which leads it to present false information with great confidence, so you need to fact-check what you get from chatbots.

In addition, chatbots can give heavily biased responses on sensitive topics. Unfortunately, this means Claude can give biased or offensive answers, depending on the prompt. However, Anthropic is working hard to make it truly neutral.

However, one good thing is that Claude is open to corrections, so if you check and see any disparity, you can point it out to the AI, and it will correct itself.

The issue of copyright infringement has been a major subject of debate. Some insist that Claude inadvertently plagiarizes original material, while others say it’s a harmless consequence of its need to pull from massive databases to function. The one thing both sides agree on, however, is that Claude does not cite the original authors, which may lead to copyright disputes.

But as stated earlier, Anthropic is committed to giving and receiving feedback to help it serve users better while keeping them secure.

Does Claude log chat data?

Because of how it works, Claude must and does store user chat data. Each prompt you enter is saved to your account permanently to help train the model. This means that if you asked it to generate code, that generated code is saved and could later be provided to another user unrelated to you.

More evidence lies in the AI’s training process. Claude is fed large amounts of information, which its designers sourced from books, reports, articles, websites, and blogs. There is no way to know exactly what has and hasn’t been used to train the AI.

There is, in fact, the possibility of lawsuits against Anthropic, as laws and regulations govern data collection and privacy on the internet. Laws such as the GDPR prohibit businesses from collecting and using the personal data of people in the EU without a lawful basis, such as consent. The CCPA works similarly but covers the personal data of California residents.

The only way to prevent Claude from logging your data is to delete your account. Here’s how you can do this:

- Log in to Claude’s homepage at claude.ai and click the “Help” button. This opens the Help Chat, which gives you access to the FAQ pages and lets you join a community or send a message to the customer support team.

- Select the option to send a message. The chatbot will provide options, including “Account Deletion.”

- Select “Account Deletion” and click to confirm. Within four weeks, you will receive an email confirming that your account has been deleted.

You could also use the email support option, but be warned that this method requires several confirmation emails before your account deletion request is granted.

Fake Claude apps you should avoid

The Claude chatbot has an official app that is only available to iPhone users. This is essential to note because there are tons of fake apps posing as Claude on Android.

But it does not stop there; even on the iOS App Store, fake Claude apps are still trying to get users to pay for and download what they believe is the Claude app. Others exist solely to steal the user data provided during sign-up and sell it to third parties.

If you come across any of the following downloadable apps, avoid them:

- GPT Writing Assistant, AI Chat

- Talk GPT – Talk to Claude

- Claude 3: Chat GPT AI

That is not all; certain websites add “Claude” to their domain names to attract traffic. These are the easiest to spot: just check whether they offer Claude as downloadable software.

Is it safe to give Claude your phone number?

Yes, it is perfectly safe to give Claude your phone number; it is only required for identity verification and authentication and will not be used for other purposes. Be aware, however, that Anthropic can share your personal data with third parties or use it to train other models. These third parties can include government agencies, other AI companies, and vendors.

You cannot bypass this phone number request using VoIP or Google Voice numbers; only real phone numbers will work.

How to keep your Claude profile secure

If you wish to secure your Claude login against snoopers and hackers, we recommend using a strong password. You can generate and manage secure passwords using a password manager.
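A password manager does this for you, but as a sketch of what a strong generator does under the hood, the Python below draws characters from the operating system’s cryptographically secure random source via the standard `secrets` module, following the pattern in Python’s own `secrets` documentation. The 20-character default and the helper name `generate_password` are our own illustrative choices.

```python
import secrets
import string

# Letters, digits and symbols: a large alphabet makes each character harder to guess.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password containing lowercase, uppercase, digit and symbol characters."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    while True:
        # secrets.choice uses a cryptographically secure random source (unlike random.choice)
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until every character class is present
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

The `random` module is deliberately avoided here: its output is predictable enough that it should never be used for passwords.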

Do not share your password or related information with the AI chatbot. Anthropic can access your conversations with Claude, so any information in them is fair game, including your credentials or other sensitive information.

To avoid this, we recommend you never share any of the following information with Claude:

- Your address

- Online usernames like your YouTube channel, Gamertag, Twitter handle, Reddit username, or anything that can expose your identity

- Your passwords

- Any financial data, including bank account details or confidential business information.

- Your full name
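If you often paste text into Claude, a simple filter can catch some of these details before they leave your machine. The sketch below masks email addresses, card-like digit runs, and @-style social handles with regular expressions. The patterns are rough assumptions of our own and will miss many formats; addresses, full names, and passwords still need your own judgment. The helper name `redact` is hypothetical.

```python
import re

# Rough, illustrative patterns. Order matters: emails are masked before
# handles so the "@" inside an email address is not treated as a handle.
REDACTIONS = [
    ("[EMAIL]", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("[CARD]", re.compile(r"\b\d(?:[ -]?\d){12,18}\b")),  # 13 to 19 digits, optional separators
    ("[HANDLE]", re.compile(r"(?<!\w)@\w{2,}")),          # e.g. a Twitter-style @username
]


def redact(text: str) -> str:
    """Replace recognizable sensitive fragments in `text` with placeholder tags."""
    for tag, pattern in REDACTIONS:
        text = pattern.sub(tag, text)
    return text


if __name__ == "__main__":
    print(redact("Mail jane.doe@example.com, card 4111 1111 1111 1111, ping @jane_doe"))
```

Treat a filter like this as a safety net, not a guarantee: anything it does not recognize is sent as-is.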

FAQs

All you need to do is create an account with Anthropic at claude.ai and sign up using your valid email address and phone number. Claude is free to use. There is, however, a premium version, Claude Pro, which gives you access to all the features of the free version, faster responses even during peak hours, and early access to new features.

Claude takes user prompts and generates human-like answers to them. It does this efficiently by tokenizing the words and phrases in the prompt, generating a probability distribution over likely next tokens, and assembling a well-put-together response. A notable part of Claude’s training is its use of reinforcement learning from human feedback, so it does not rely on purely automated processes for information filtering. Instead, human AI trainers feed it conversations involving both parties.
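To make the “probability distribution” step concrete, here is a toy sketch of how a language model turns raw scores for candidate next tokens into probabilities with a softmax and then picks one. The scores are made up for illustration; real models use subword tokenizers and vocabularies of many thousands of tokens, and Claude’s actual decoding settings are not described here. Greedy decoding (always taking the most likely token) is just one simple strategy.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max score for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores a model might assign to candidate next tokens
# after the prompt "The capital of France is"
logits = {" Paris": 6.0, " Lyon": 2.5, " a": 1.0, " located": 0.5}
probs = softmax(logits)

# Greedy decoding: pick the single most likely next token
next_token = max(probs, key=probs.get)

if __name__ == "__main__":
    for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
        print(f"{tok!r}: {p:.3f}")
    print("chosen:", repr(next_token))
```

A full response is produced by repeating this step: the chosen token is appended to the prompt and the model scores the next candidates.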

Absolutely. Using a premium VPN keeps you safe online as you browse the web and use Claude. But a VPN can’t help you access Claude if it isn’t available in your location, since sign-up requires a real phone number.